Executive Summary

Firmographic data, like industry classification, employee count, and location, is notoriously messy and challenging to analyze. Modern databases offer a unique opportunity to clean and maintain this data: by leveraging an LLM, you can synthesize industry information for your accounts directly within a SQL query.

I recently took on exactly this challenge, so this isn’t an abstract solution. I’m using generic data below for data privacy reasons, but this is a real-world solution for a real-world problem I solved for a RevOps team.

h/t to Jacob Matson, who demonstrated the underlying concept in MotherDuck.

Problem 1: Maintaining Firmographic Data is a Challenge

One dreaded question for analysts on the sales or revenue team: “quick qq: Can I get our sales broken out by industry?” This question often signals a few late nights spent cleaning up industry data to provide a rough answer to stakeholders who, meanwhile, are wondering, “Why is this taking so long? Don’t we already pay for firmographic data?”

The never-ending hamster wheel of maintaining firmographic data is a universal challenge. There’s a reason why Dun & Bradstreet took in $2.3B in revenue in 2023 providing this data: it’s not easy to aggregate or keep up to date.

Problem 2: Overwhelming Industry Categories

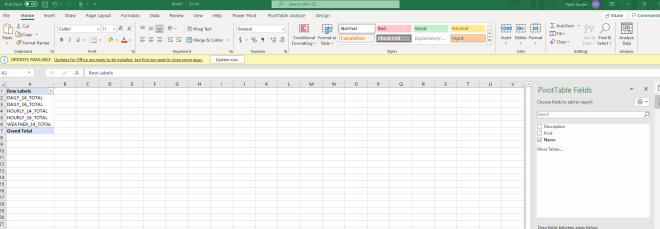

There is another visible failure point in the chart above: an excessive number of industry categories.

A hidden aspect of any industry cleanup project is narrowing down to a manageable list of 10–15 industry categories. Leave the intricate sub-industries for later—they’re harder to get right, demand 10 times the maintenance effort, and are rarely used. Only address them when there’s a strong business case to do so.

Solving Problem #2, First

Before we can get to the neat LLM-in-SQL work, we need a clear list of industries for the LLM to choose from. Usually you want to build this list in collaboration with the business, but GPT-4o can generate a solid starting point as well. Here’s the final list I landed on after refining GPT-4o’s starting point to match my preferences:

- Technology

- Healthcare & Life Sciences

- Financial Services

- Retail & Consumer Goods

- Manufacturing & Industrial

- Education

- Public Sector & Government

- Energy & Utilities

- Real Estate & Construction

- Transportation & Logistics

- Hospitality & Recreation

- Professional Services

- Nonprofit & Social Impact

- Media & Entertainment

Keep in mind, this isn’t a trivial task if your company hasn’t tackled it seriously before. There is a near-infinite number of industry breakdowns out there, so you need to give purposeful thought to how to group industries based on your own customer base. Your list will likely differ from others.

Solving Problem #1 Using an LLM in SQL

Now that we have an industry list, it’s time to ask an LLM to help. You could always export your account list to a CSV, upload it to ChatGPT, and ask it there, but that requires manual steps (download CSV / upload to GPT / download CSV / upload to DWH), which could get in the way of automating this in the future.

But Snowflake gives us the option to ask an LLM these questions directly in SQL, using context from our CRM and other data. Let’s take advantage!

For the test, I found 25 companies in my local area and wrote down only their names. Some are national brands or chains, while others are small mom-and-pop shops and everything in between. This gives me a list like the one below:

OK great! Now we need the syntax for querying an LLM in Snowflake. There’s plenty of documentation covering the available LLMs and functions, and the specific function we want is COMPLETE (called as SNOWFLAKE.CORTEX.COMPLETE). I tested all of the LLMs available in Snowflake for this task, and the most consistently accurate one with reasonable processing times was llama3.1-70b, so we’ll use that one for now.
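A minimal sketch of this kind of call, assuming a hypothetical `accounts` table with a `company_name` column. The prompt wording here is illustrative, not the exact prompt behind the results in this post.

```sql
-- Sketch only: `accounts` and `company_name` are placeholder names.
SELECT
    company_name,
    SNOWFLAKE.CORTEX.COMPLETE(
        'llama3.1-70b',  -- the model named in the text above
        'Categorize the company below into one of these industries: '
        || 'Technology, Healthcare & Life Sciences, Financial Services, '
        || 'Retail & Consumer Goods, Manufacturing & Industrial, Education, '
        || 'Public Sector & Government, Energy & Utilities, '
        || 'Real Estate & Construction, Transportation & Logistics, '
        || 'Hospitality & Recreation, Professional Services, '
        || 'Nonprofit & Social Impact, Media & Entertainment. '
        || 'Company: ' || company_name
    ) AS industry_raw
FROM accounts;
```

The function takes a model name and a prompt string and returns the model's response as text, so each row gets its own LLM call.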

And here were the results. They are … uh … about what one expects on a first try. Overly verbose.

OK, time to do some “Prompt Engineering” to get this right, because a paragraph-long response is not helpful at all.

Specifically, I added a line saying: Only return the industry in each response, I want no other text or explanations.

This works well! Very limited extra text, but it isn’t really categorizing like I want it to. For instance, I consider the After Image Styling Salon to be a Professional Service, not a Retail/Consumer Good.
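Since a little extra text can still slip through, one option is to check every response against the approved list before trusting it. The sketch below assumes the raw responses were saved to a hypothetical `account_industries` table with `company_name` and `industry_raw` columns.

```sql
-- Sketch only: `account_industries` and its columns are placeholder names.
WITH allowed_industries AS (
    SELECT column1 AS industry
    FROM VALUES
        ('Technology'), ('Healthcare & Life Sciences'), ('Financial Services'),
        ('Retail & Consumer Goods'), ('Manufacturing & Industrial'), ('Education'),
        ('Public Sector & Government'), ('Energy & Utilities'),
        ('Real Estate & Construction'), ('Transportation & Logistics'),
        ('Hospitality & Recreation'), ('Professional Services'),
        ('Nonprofit & Social Impact'), ('Media & Entertainment')
)
SELECT
    r.company_name,
    TRIM(r.industry_raw)   AS industry_response,
    a.industry IS NOT NULL AS is_valid_industry  -- FALSE when the response is not on the list
FROM account_industries AS r
LEFT JOIN allowed_industries AS a
    -- Compare case-insensitively after stripping leading/trailing spaces
    ON LOWER(TRIM(r.industry_raw)) = LOWER(a.industry)
ORDER BY is_valid_industry, r.company_name;  -- off-list responses sort first
```

Rows where `is_valid_industry` is FALSE are the ones worth a second look, either by hand or with a tighter prompt.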

The LLM would benefit from additional context for each industry for decision making. This is exactly what I would do if giving this task to someone at work. Given ambiguity, both a person and an LLM make arbitrary decisions.

Here’s the adjusted query:

The context helped! Notably, I found additional improvement when I described specific decision points in the text. Once I told the LLM in the prompt that places like Auto Repair and Beauty were Professional Services, it correctly categorized them. The lesson: if you are sure a specific company type should be in a specific industry, be explicit.
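One way to keep that context maintainable is to store a short description per industry and assemble the prompt from it. This is a sketch under assumptions: the `accounts` table, the guidance wording, and the prompt text are all illustrative, and only the auto repair and beauty rule comes from the experiment described here.

```sql
-- Sketch only: table names, guidance text, and prompt wording are illustrative.
WITH industry_guide AS (
    SELECT column1 AS industry, column2 AS guidance
    FROM VALUES
        ('Professional Services', 'Businesses that sell expertise or a service, including auto repair and beauty salons'),
        ('Retail & Consumer Goods', 'Businesses that mainly sell physical products to consumers'),
        ('Technology', 'Software, hardware, and internet companies')
        -- add one row per industry on the list
),
prompt_guide AS (
    -- Collapse the guide into a single bulleted block of text
    SELECT LISTAGG('- ' || industry || ': ' || guidance, '\n')
               WITHIN GROUP (ORDER BY industry) AS guide_text
    FROM industry_guide
)
SELECT
    a.company_name,
    TRIM(SNOWFLAKE.CORTEX.COMPLETE(
        'llama3.1-70b',
        'Categorize the company below into exactly one of these industries:\n'
        || g.guide_text
        || '\nOnly return the industry in each response, I want no other text or explanations.'
        || '\nCompany: ' || a.company_name
    )) AS industry
FROM accounts AS a
CROSS JOIN prompt_guide AS g;  -- one guide row, attached to every account
```

Keeping the guidance in rows rather than one long string means a new decision rule is a one-line change instead of a prompt rewrite.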

Here’s the new output:

Is this perfect? No, but it’s a great start given that it’s working solely off company name and several of these are small or family-owned.

Overall:

- 18 of the 25 I consider correct or nearly correct (72%)

- 7 of the 25 I consider incorrect (28%)

Not a bad hit rate for a few minutes of an LLM prompt, with room to improve!
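If you want to track this hit rate as the prompt evolves, a small review table makes the arithmetic repeatable. The sketch assumes a hypothetical `industry_review` table with one row per test company and a BOOLEAN `is_correct` column filled in by a human reviewer.

```sql
-- Sketch only: `industry_review` and `is_correct` are placeholder names.
SELECT
    COUNT(*)             AS companies_reviewed,
    COUNT_IF(is_correct) AS correct,
    -- DIV0 returns 0 instead of raising an error when the table is empty
    ROUND(DIV0(100 * COUNT_IF(is_correct), COUNT(*))) AS pct_correct
FROM industry_review;
```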

I get why names like “Taco Book” threw the LLM for a loop. That’s a local taco shop, but with the word “Book” in the name and very little online about the company, I can see why the coin flip landed on Retail & Consumer Goods.

What next?

I see potential to make this approach even more accurate with additional CRM data: company descriptions, headquarters data, sales emails, etc. If it can get to ~7/10 off of name alone, imagine the possible improvement from more refined prompt engineering combined with extra data context.

For example, if you had information in your CRM about Taco Book as a restaurant, the LLM would pick up the industry rather quickly.
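As a sketch of what that could look like, the query below adds a CRM description to the prompt. The `accounts` table and its `description` column are hypothetical, and the prompt wording is illustrative.

```sql
-- Sketch only: `accounts`, `company_name`, and `description` are placeholder names.
SELECT
    company_name,
    TRIM(SNOWFLAKE.CORTEX.COMPLETE(
        'llama3.1-70b',
        'Categorize the company below into one of these industries: '
        || 'Technology, Healthcare & Life Sciences, Financial Services, '
        || 'Retail & Consumer Goods, Manufacturing & Industrial, Education, '
        || 'Public Sector & Government, Energy & Utilities, '
        || 'Real Estate & Construction, Transportation & Logistics, '
        || 'Hospitality & Recreation, Professional Services, '
        || 'Nonprofit & Social Impact, Media & Entertainment. '
        || 'Only return the industry in each response, I want no other text or explanations. '
        || 'Company: ' || company_name
        -- Concatenating a NULL makes the whole prompt NULL, so default it
        || '. Description: ' || COALESCE(description, 'not available')
    )) AS industry
FROM accounts;
```

The `COALESCE` matters more than it looks: without it, any account missing a description would send a NULL prompt and get no classification at all.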

This feels like an application of LLMs in SQL that’s only scratching the surface of what is possible. RevOps teams everywhere may soon have a tool to update that pesky CRM data, and surely there are other use cases for this across other domains.

I’d love to hear from you if you are working on something similar!